Dataflow Hardware Acceleration of DNNs with High-Level Synthesis

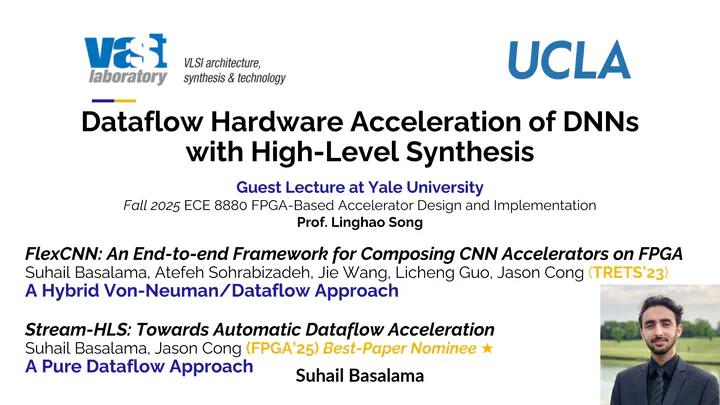

Guest Lecture at Yale University (Fall 2025)

This guest lecture, delivered by Suhail Basalama for Yale University's ECE 8880: FPGA-Based Accelerator Design and Implementation (Fall 2025, taught by Prof. Linghao Song), explores modern approaches to accelerating deep neural networks (DNNs) with dataflow architectures and high-level synthesis (HLS).

The talk introduces two complementary research projects from the UCLA VAST Lab:

FlexCNN (TRETS’23): An end-to-end framework for composing CNN accelerators on FPGAs, featuring dynamic tiling, data layout optimization, and composable systolic arrays.
📄 Read the paper (ACM TRETS 2023): https://dl.acm.org/doi/10.1145/3649409
🔗 FlexCNN on GitHub: https://github.com/UCLA-VAST/FlexCNN
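To make the term "systolic array" concrete, here is a minimal, generic sketch of an output-stationary systolic array computing a matrix product, simulated cycle by cycle. It is not FlexCNN's actual architecture or code; the function name `systolic_matmul` and the output-stationary dataflow are assumptions chosen for illustration. Each processing element (PE) only talks to its left and upper neighbors, and inputs are skewed so that PE (i, j) sees `A[i, k]` and `B[k, j]` together at cycle `k + i + j`.

```python
import numpy as np


def systolic_matmul(A, B):
    """Cycle-level simulation of a hypothetical R x C output-stationary systolic array.

    A has shape (R, K) and B has shape (K, C). Row i of A enters PE (i, 0)
    delayed by i cycles; column j of B enters PE (0, j) delayed by j cycles.
    Each PE multiplies its two inputs, accumulates locally, and forwards
    A values to the right and B values downward on the next cycle.
    """
    R, K = A.shape
    K2, C = B.shape
    if K != K2:
        raise ValueError("inner dimensions must match")
    acc = np.zeros((R, C), dtype=np.result_type(A, B))  # stationary partial sums
    a_reg = np.zeros((R, C), dtype=A.dtype)  # A value each PE passes right
    b_reg = np.zeros((R, C), dtype=B.dtype)  # B value each PE passes down
    # The last useful MAC happens at cycle (K-1) + (R-1) + (C-1).
    for t in range(K + R + C - 2):
        new_a = np.zeros_like(a_reg)
        new_b = np.zeros_like(b_reg)
        for i in range(R):
            for j in range(C):
                # Left input: skewed A stream at the array edge, else the left neighbor.
                if j == 0:
                    k = t - i
                    a_in = A[i, k] if 0 <= k < K else 0
                else:
                    a_in = a_reg[i, j - 1]
                # Top input: skewed B stream at the array edge, else the upper neighbor.
                if i == 0:
                    k = t - j
                    b_in = B[k, j] if 0 <= k < K else 0
                else:
                    b_in = b_reg[i - 1, j]
                acc[i, j] += a_in * b_in
                new_a[i, j] = a_in
                new_b[i, j] = b_in
        a_reg, b_reg = new_a, new_b  # all PEs update their registers in lockstep
    return acc


if __name__ == "__main__":
    A = np.arange(6).reshape(2, 3)
    B = np.arange(12).reshape(3, 4)
    print(np.array_equal(systolic_matmul(A, B), A @ B))  # True
```

The simulation takes K + R + C - 2 cycles for a K-deep reduction, so the R + C - 2 extra cycles are pipeline fill and drain. Tiling a large layer onto a fixed-size array, as a framework like FlexCNN must do, amounts to choosing which (R, C, K) block of the computation each pass of the array handles.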

Stream-HLS (FPGA’25, Best Paper Nominee): A pure dataflow compiler that automates multi-kernel streaming architectures through MLIR transformations, performance modeling, and mixed-integer nonlinear programming (MINLP)-based design space exploration.
📄 Read the paper (FPGA 2025): https://dl.acm.org/doi/10.1145/369471…
🔗 Stream-HLS on GitHub: https://github.com/UCLA-VAST/Stream-HLS
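Why performance modeling matters for streaming designs: when kernels are chained through FIFOs, they overlap, so the slowest stage sets the rate. The sketch below is a hypothetical first-order model, not Stream-HLS's actual cost model. It assumes each stage needs `work` cycles per item, divided by an integer parallelization factor, plus a fixed pipeline `depth`. In place of the MINLP solver, it uses plain exhaustive search over the factors, which only works for tiny design spaces. All function names and numbers are illustrative assumptions.

```python
import itertools
import math


def stage_ii(work, factor):
    # Hypothetical model: a stage doing `work` cycles per item, parallelized by
    # `factor`, accepts a new item every ceil(work / factor) cycles (its II).
    return math.ceil(work / factor)


def dataflow_latency(works, factors, depths, n_items):
    # Streaming: stages overlap through FIFOs, so after the pipeline fills
    # (sum of depths), one item completes every max(II) cycles.
    iis = [stage_ii(w, f) for w, f in zip(works, factors)]
    return sum(depths) + (n_items - 1) * max(iis)


def sequential_latency(works, factors, depths, n_items):
    # Non-dataflow baseline: each kernel processes every item before the next starts.
    return sum(d + (n_items - 1) * stage_ii(w, f)
               for w, f, d in zip(works, factors, depths))


def explore(works, depths, n_items, budget, max_factor=8):
    """Exhaustive search over per-stage factors whose sum fits `budget`.

    Stands in for an MINLP solver; exponential in the number of stages.
    Returns (latency, factors) for the first best design found.
    """
    best = None
    for factors in itertools.product(range(1, max_factor + 1), repeat=len(works)):
        if sum(factors) > budget:
            continue
        lat = dataflow_latency(works, factors, depths, n_items)
        if best is None or lat < best[0]:
            best = (lat, factors)
    return best


if __name__ == "__main__":
    works, depths = [8, 2, 4], [10, 3, 5]
    lat, factors = explore(works, depths, n_items=100, budget=8)
    print(lat, factors)                                      # 216 (4, 1, 2)
    print(sequential_latency(works, factors, depths, 100))   # 612
```

The search balances the stages so that every initiation interval is 2 cycles; adding resources to an already-fast stage would not help, because throughput is bounded by the bottleneck. Under this model the same factors give roughly a 2.8x speedup from streaming alone (612 vs. 216 cycles).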

Together, these works demonstrate scalable, automated, and high-performance FPGA-based acceleration for AI workloads, bridging the gap between algorithm design and hardware implementation.